How to Successfully Deploy Kubernetes Apps with Terraform

Admit it, at one point you may have attempted to get a Kubernetes application deployment working with Terraform, got frustrated for one of many reasons and gave up entirely on using the tool in favor of other, less finicky solutions. At least, that is exactly what I did. That was then, and now is now. With multiple updates to both the helm and Kubernetes providers, it is time to re-evaluate how you can use Terraform for deploying some of your workloads.

How to deploy custom workloads

It is fairly common to see managed Kubernetes cluster deployments being done with the venerable infrastructure as code tool, Terraform. Jenkins-x will even create cloud-specific Terraform manifests to stand up new environment clusters. These deployments are driven by cloud specific Terraform providers, which are maintained and updated via their respective cloud owners. For a primer on how to deploy a managed cluster for one of the three "big clouds," hit up their resource documentation and find examples online. I’ll not cover that aspect of the deployment, but here are some good starting points:

- Azure: AKS Terraform Resource

- AWS: EKS Terraform Resource

- Google: GKE Terraform Resource

What is less commonly talked about is using the terraform Kubernetes provider afterward to deploy custom workloads. Usually, once the initial cluster is constructed, the deployment of workloads into the cluster tend to fall to other tools of the trade like helm, helmfile, kustomize, straight YAML, or a combination of one or more of these technologies. Kuberentes is just a state machine with an API after all, right?

I’m going to go down the rabbit hole of how one can use Terraform to not only deploy the cluster, but also to setup initial resources – such as namespaces, secrets, configmaps – and put them all into a deployment via the Kubernetes provider. And we will do this all with a single state file and manifest.

NOTE: We can also do custom helm deployments via the helm provider but that is not the purpose of this exercise.

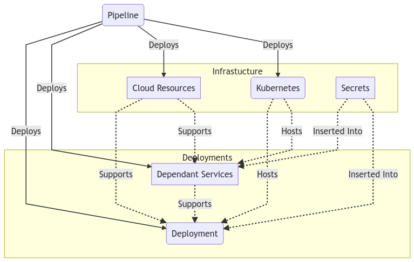

Here is the obligatory diagram showing some component parts of a multiple part deployment into Kubernetes.

Why and when to to deploy the cluster

Why would you want to do such a thing? Well, I’m glad I asked myself that question very loudly and with a slight tone of derision to my voice. Honestly, you likely don’t want to do this, at least not for every project. But there are some sound reasons. My reasons were fairly nuanced. I needed to quickly convert a custom Kubernetes deployment into a pipeline that would fully stand up a Kuberentes cluster with the deployment so it could be run on-demand and shutdown after it was done processing.

- There is a need to take some snowflake Kubernetes cluster app and turn it into a pipelined deployment for multiple environments

- You must be able to ensure that a deployment state is always the same

How to do it - Part 1

In my case, I already had a bespoke deployment running in a cluster that I needed to mimic. So I used an existing tool that was able to generate a good deal of the Terraform starting manifest automatically. It turned a manual slog into a fairly rapid affair. The tool is called k2tf and it will convert Kubernetes yaml into Terraform. This is a great starting point, one that I recommend you start at if your situation allows.

How to do it - Part 2

The Terraform Kubernetes provider exposes most of the attributes of the resources it generates via the metadata of the resource. This makes chaining your resource dependencies very easy. Let's look at a partial example that I’ve pared back here for brevity sake.

https://gist.github.com/zloeber/d01a6dde6bce82b216b6631d2ed0d612

As you can see, we create the namespace, a secret, a configmap, and connect them all to a deployment. I purposefully included the config map in this deployment as it was rather hard to find an example of one in action via terraform HCL.

For a fuller example of a terraform deployment in tandem with an Azure AKS cluster check out my repo. I include some additional nuances around permissions that are also rather difficult to find, for whatever reason.

Be on the lookout for these issues

Let's take a look at some possible issues that you may encounter, and a few suggestions on how to work around them.

- Using the Kubernetes provider is considered a faux pas in Terraform land when used alongside a cluster deployment. This is usually due to provider dependency issues (because Terraform providers don’t adhere to any kind of dependency rules). Because of this, it is recommended to do deployment and cluster construction in separate manifests and thus, separate state databases. We work around this mostly by the way we configure the provider to eat output from the cluster creation resource. This may lead to race conditions and other issues if not carefully managed.

- We may have to run the Terraform apply multiple times to get the deployment to go through if you decide to implement a helm chart concurrently (as with the Kubernetes provider, the same is true for the helm provider). Moreso, we must run multiple times when using Azure ACR as helm private repositories it seems. These private repos seem to have an abysmally short-lived authentication token that can time out well before the cluster has been created. This means the helm deployment is likely to not find the chart when it comes time to actually deploy it. You can get around this by including custom helm charts local with the deployment or with carefully crafted null_resource scripts to run azure cli commands and depends_on constructs.

- Cluster destruction takes far longer than construction, from my experience. My theory is that all the resource finalizer annotations that cloud providers like to force upon us cause the destruction of resources to hang to the point of hitting some kind of internal cloud provider timeout. That’s just my personal theory, though. Obviously your experiences may vary.

- Kubernetes finalizers are the hidden surprise of resource redeployments. Be aware that they exist, that some operators like to use them, that some cloud providers will use them, and that they can and will prevent you from deleting or redeploying resources in your clusters. Know how to clear them out in a pinch.

Wrapping it up

While Terraform may not be the best solution for maintaining state in Kubernetes clusters with fluctuating workloads (where you may not have an expected state from day to day), it is a perfectly sound solution for known workloads and can be easily adopted for use in ephemeral cluster deployments.

Ready for what's next?

Together we can take the first steps to elevate your cloud environment. Start your hands-on learning right away by joining our next AWS Serverless Immersion Day.