Hugo – A DevOps Approach

I updated my site to use Hugo some time ago, and recently I found the time to add a deployment pipeline for it as well. This short article covers how the pipeline code works and dives into Azure DevOps multi-stage pipelines.

Introduction

Working in the industry, I sometimes forget to update the world on what I’m doing. It has been almost a full year since I last posted anything, so it was long past time to get more content out there. The problem was that, after such a long gap, I had forgotten how to deploy to my own site. This time I figured I’d just set up a proper pipeline to get things going again.

Here is the stack I’ve landed on for this website:

- Static Site Generator: Hugo

- DNS Hosting: Cloudflare.com

- CDN: Cloudflare.com

- Domain Registrar: Namecheap.com

- Git Hosting: GitHub.com

- Web Content Hosting #1: GitHub Pages

- Web Content Hosting #2: Render.com

- Pipeline Platform: Azure DevOps

The only cost in all of this is the custom domain name and some time.

The Process (TL;DR)

End to end, the process looks like this:

- Purchase your custom domain name (namecheap.com). NOTE: this is the only cost for this entire setup, and it is not strictly required.

- Optionally, transfer the domain name to Cloudflare.com. (It seems they are not a registrar you can buy new domains from, but once you own a domain you can transfer it to them as its registrar.)

- Create a new private repo in GitHub for authoring content (e.g. yoursite.com)

- Optionally, set up a GitHub SSH key to make your life easier.

- Create a new public repo in GitHub for publishing your production site (e.g. yoursite.com-content)

- Create a new public repo in GitHub for publishing draft/development content (e.g. dev.yoursite.com-content)

- Create a new public repo in GitHub to host your Azure DevOps (ADO) pipeline template code

  - You may be able to reference my repo instead, but that may not be wise, as I may update things at any time.

- Download the Hugo binary

- Create a new Hugo site

- Initialize a local git repo in the generated site folder

- Add a Hugo theme to the site as a git submodule

- Add content from 10+ years of blogging…

- Push the repo to your GitHub authoring repo as the upstream source

- Add a ‘develop’ branch for the dev version of your site

- Push up your develop branch

- Create a personal Azure DevOps account (dev.azure.com)

- Create a service connection to GitHub and authorize access to all your repos

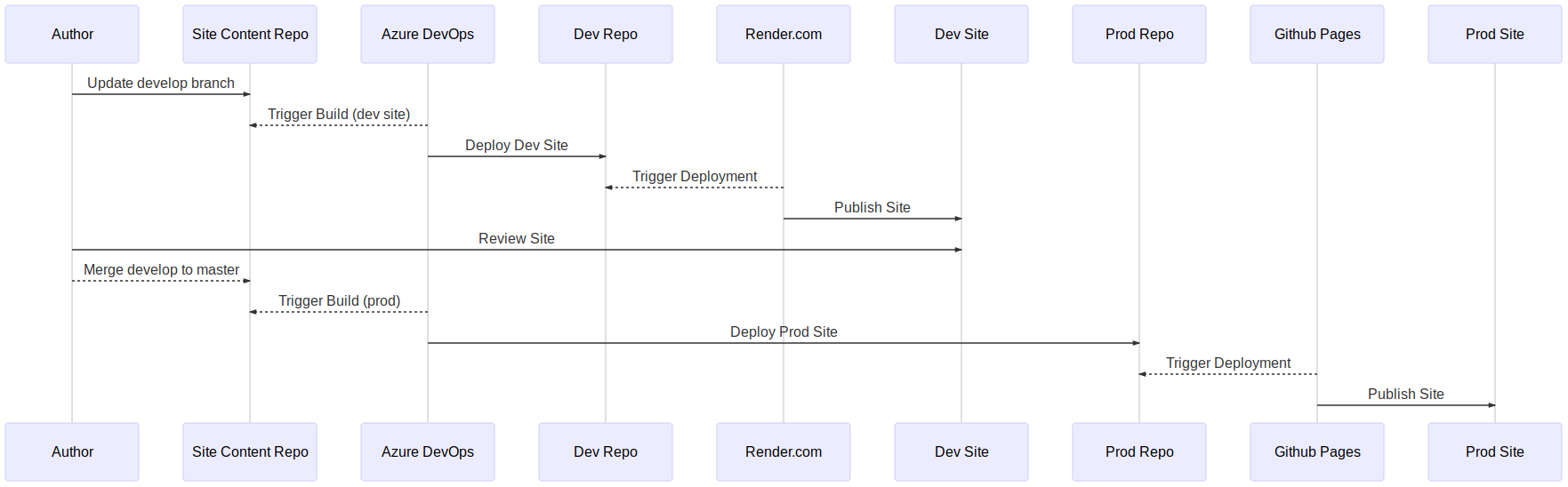

This sets the stage for adding content to your site in a development environment for review, then approving it for release. When complete, the workflow looks a bit like the following sequence diagram.

To make any of this work, we need a few pipelines in ADO: one to build our Hugo site content into an artifact, and another to push that deployment content into our repositories. We will use Azure DevOps multi-stage pipelines and templates to put this in place.
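The build half of that split can be sketched in Python: pack Hugo’s output folder into a tar.gz artifact and record a SHA-256 digest, so a later stage could confirm it is deploying exactly what was built. This is a minimal sketch, not the actual ADO template; the function names are hypothetical, and the digest check is an illustrative extra rather than part of the original pipeline.

```python
import hashlib
import tarfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large artifacts do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def package_site(output_dir: Path, artifact_path: Path) -> str:
    """Pack a generated Hugo output folder into a tar.gz and return its SHA-256.

    Archive paths are stored relative to output_dir (./index.html, ...), so
    extracting the archive recreates the site in whatever folder a deploy
    step chooses. artifact_path should live outside output_dir.
    """
    with tarfile.open(artifact_path, "w:gz") as tar:
        tar.add(str(output_dir), arcname=".")
    # A deploy stage can recompute this digest to verify the artifact is unchanged
    return sha256_of(artifact_path)
```

In an ADO pipeline the archive would then be published as a pipeline artifact; the sketch only covers creating it.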

The Hugo Site

First, set up your Hugo website. I’m not going to rehash what is already well documented on the Hugo site. You will basically get the binary, run a hugo new site command, initialize the result as a git repo, and then add a theme as a git submodule. If you are desperate for a site to start with, I suppose you could clone my slide deck site. It includes a nice Makefile, render.yaml, and azure-pipeline.yml you can modify to suit your needs. But you really should spend a few cycles making your own site so you become familiar with the site layout and some of the methodology behind how Hugo works.

On Git Submodules: I personally avoid git submodules. They tend to obscure a git repo’s scope and complexity. Few developers I’ve worked with know to check for them, or how to update them. They are also far more difficult to remove than I believe they should be. But in this case they DO make working with upstream themes much easier.

The Pipeline

I’ve found that the best way to start working on a new pipeline is to lay out the required manifest declaratively. Even if it is pseudocode, throw together some YAML declaring what you would like the finished state to look like. Once you have this, adding the template components is pretty easy.

On Authoring Pipelines: If you start with the imperative code first (which I have done before), you may as well throw it all into a Makefile and pluck out the parts you need for your pipeline pieces later. But you will end up doing more work this way than you need to. If you approach your DevOps work with the end state in mind, expressed in a declarative manifest, everything else will fall into place, I promise.

My site’s current azure-pipeline.yml looks very similar to what I first put down as a draft.

You will notice that stages run only for specific branches and that each branch’s deployment points to a different GitHub repository. This is one way to manage the process, and it works well enough for me. It integrates well with a variety of git branching models, but you lose a bit of visibility when entire stages are skipped in the pipeline GUI.
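The branch-to-stage routing boils down to a lookup from the source branch to a target repo. The sketch below is a hypothetical Python model of that logic, not pipeline code: in azure-pipeline.yml it would be a stage condition on the predefined Build.SourceBranch variable (for example, eq(variables['Build.SourceBranch'], 'refs/heads/develop')). The repo names are the example names from the process list, and the mapping itself is an assumption about how the stages are wired.

```python
from typing import Optional

# Hypothetical mapping mirroring the per-branch stage conditions:
# each branch that deploys gets its own GitHub content repo.
DEPLOY_TARGETS = {
    "refs/heads/develop": "dev.yoursite.com-content",
    "refs/heads/master": "yoursite.com-content",
}


def deploy_target(source_branch: str) -> Optional[str]:
    """Return the repo a build of source_branch deploys to, or None when
    no deploy stage should run (the stage shows up as skipped)."""
    # Accept both the short name ("develop") and the full ref form
    if source_branch.startswith("refs/"):
        ref = source_branch
    else:
        ref = f"refs/heads/{source_branch}"
    return DEPLOY_TARGETS.get(ref)
```

A feature branch such as refs/heads/feature/new-post maps to None, which corresponds to every deploy stage being skipped for that run.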

The Code

From the pipeline above, we can infer that a build template and a release template are required, so I created both and put them in their own repo for reuse on future sites.

I found it best to split my templates into build and deploy first, then break them down further into jobs or steps as I went. Here is the Hugo build pipeline.

This builds the site according to your config.toml file, which should hold most of your settings. The template also allows for different content and output paths.
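A minimal sketch of the command such a build step might assemble, assuming the template passes the config file, content path, and output path straight through to Hugo’s --config, --contentDir, and --destination flags. The function name and the optional --baseURL override are illustrative assumptions, not taken from the original template.

```python
from typing import List, Optional


def hugo_build_args(
    config: str = "config.toml",
    content_dir: str = "content",
    destination: str = "public",
    base_url: Optional[str] = None,
) -> List[str]:
    """Assemble a hugo command line with configurable content and output paths."""
    args = [
        "hugo",
        "--config", config,            # site settings live here
        "--contentDir", content_dir,   # where the markdown content is read from
        "--destination", destination,  # where the generated site is written
    ]
    if base_url:
        # Optional override, e.g. if a dev build needs a different base URL
        args += ["--baseURL", base_url]
    return args
```

Passing the result to subprocess.run(hugo_build_args(), check=True) would run the build and fail the step on a non-zero exit code.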

The deployment stage takes the packaged artifact (a tar.gz file) and extracts it into a git repo folder of your choosing. This assumes the repo is also hosted on GitHub and that your service connection has access to it. The release pipeline WILL clear out and overwrite anything in that folder, then force pull the changes.
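The clear-then-extract behavior can be sketched with Python’s tarfile and shutil modules. This is a hypothetical stand-in for the release template, assuming the artifact is a tar.gz whose paths are relative to the site root and that the target folder is docs; committing and pushing the result is left out.

```python
import shutil
import tarfile
from pathlib import Path


def deploy_artifact(artifact: Path, repo_root: Path, folder: str = "docs") -> Path:
    """Replace repo_root/folder with the contents of a tar.gz site artifact."""
    target = repo_root / folder
    # Refuse to wipe the repo root (and its .git) or anything outside the repo
    if repo_root.resolve() not in target.resolve().parents:
        raise ValueError(f"deploy folder must be inside {repo_root}")
    if target.exists():
        shutil.rmtree(target)  # clear out the previous deployment entirely
    target.mkdir(parents=True)
    with tarfile.open(artifact, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons: reject absolute paths and ../ escapes in the archive
            tar.extractall(target, filter="data")
        else:
            tar.extractall(target)
    return target
```

Removing the folder before extracting means pages deleted from the site also disappear from the published copy, rather than lingering as stale files.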

In our case, we push Hugo’s output into a repo’s docs folder to make it easier to publish via GitHub Pages later (a manual configuration setting on the repo, by the way). The release pipeline is pretty simple. It also defines an environment, which lets you set up some gating, such as approvals, around final releases if you so desire (currently a bleeding-edge ADO feature configured manually).

The hardest part of all this was figuring out how to pass the service connection authentication through to the deployment stage. We force another checkout of the current repo to ensure that the proper authentication headers are in place, which we then strip out for our own use. Alternatively, you can use a PAT and manually supply a username/password via environment variables in the pipeline. I opted not to do that, to reduce manual effort a little.
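A sketch of the “strip it out for our own use” idea, assuming the persisted checkout leaves a git config extraheader value of the form AUTHORIZATION: basic <base64 of username:token>. The exact config key and the username ADO uses for a GitHub service connection are assumptions here, as are the function names and the owner/repo values in the usage.

```python
import base64
from typing import Tuple
from urllib.parse import quote


def parse_basic_auth_header(header: str) -> Tuple[str, str]:
    """Split 'AUTHORIZATION: basic <b64>' into (username, password)."""
    name, _, value = header.partition(":")
    if name.strip().lower() != "authorization":
        raise ValueError("not an Authorization header")
    scheme, _, encoded = value.strip().partition(" ")
    if scheme.lower() != "basic":
        raise ValueError(f"unsupported auth scheme: {scheme!r}")
    decoded = base64.b64decode(encoded.strip()).decode("utf-8")
    username, _, password = decoded.partition(":")
    return username, password


def authenticated_remote(username: str, password: str, owner: str, repo: str) -> str:
    """Build an HTTPS remote URL carrying the credentials (percent-encoded)."""
    user = quote(username, safe="")
    pwd = quote(password, safe="")
    return f"https://{user}:{pwd}@github.com/{owner}/{repo}.git"
```

Embedding a token in a remote URL is convenient but can leak into logs; passing the original header back with git -c http.extraheader="..." avoids writing the token anywhere new.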

Oh, I also broke out a few other steps for reuse. These include the htmltest and Hugo binary installs, as well as the htmltest run itself. This was largely to make things cleaner and more composable. But honestly, it was more to have additional Lego pieces in my arsenal for future pipelines 🙂 You can find them in the pipeline repository referenced earlier.

Gluing It Together

In Azure DevOps, select your profile and go to Preview features to enable the multi-stage pipelines view, since that is what we will be using. We also need to create a service connection in Azure DevOps with access to the GitHub repositories. I like to call this ‘github_services’. It will be used for every part of the pipeline deployment, so make sure it has access to all the repos involved (or just give it full access if you do not care so much). There are no secrets or environment variables added to the deployment other than this connection.

You will have to set up domain forwarding for your custom domains to render.com or GitHub, depending on how you choose to host your site. I use both for my blog because I’m a dork. For my slide deck Hugo site I just use render.com (but the same pipeline code serves both).

Once your site structure is in place, you are ready to publish some content.

Publish The Test Site

To create and publish a draft post, make sure you are on the develop branch, create a post, then push it to develop. Let the pipeline do its thing and review your dev site.

Assuming your ADO pipeline is set up similarly to mine for this site, a newly generated site will land in your development repo in GitHub. You then need only link a render.com site to that GitHub repo, and your site will ‘render’ pretty quickly thereafter.

Git Merge to Production

To promote your draft to production, simply merge develop into master and push your changes to GitHub.

This publishes to the ./docs folder of your production deployment repo.

On Static Site Hosting: For my blog I use GitHub Pages along with Cloudflare, so I can get some nice stats on visitors and, simply put, because I’ve come to respect Cloudflare from working with them in the past. If you use GitHub Pages, you will have to update the deployment repo’s settings to use the ./docs deployment path and set up Pages hosting. My pipeline also automatically creates the CNAME file in your release artifact, which is needed for this to work properly with a custom domain name.
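Writing that CNAME file is essentially a one-liner; this hypothetical helper adds a little validation. It assumes the file goes at the root of the published folder (./docs here), which is where GitHub Pages looks for it, and the domain in the usage is a placeholder. In Hugo, a CNAME file placed in static/ would also be copied to the output root.

```python
from pathlib import Path


def write_cname(site_dir: Path, domain: str) -> Path:
    """Write the CNAME file GitHub Pages reads to serve a custom domain."""
    domain = domain.strip().lower()
    # The file must hold a bare hostname, not a URL
    if not domain or "/" in domain or ":" in domain:
        raise ValueError(f"expected a bare domain like 'www.example.com', got {domain!r}")
    cname = site_dir / "CNAME"
    cname.write_text(domain, encoding="utf-8")
    return cname
```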

Final Thoughts

Pipelining a Hugo static site is certainly a fun little side project. Static site generators are extremely DevOps friendly, and I highly recommend that anyone looking for more DevOps acumen give it a shot for their own site. You will hone, or even learn, foundational DevOps skills in several areas, including:

- Version Control (git)

- Branch management

- CI/CD

- Pipelines

- Cloud integrations

- DNS

- Webhooks/Triggers

I don’t know if I should be proud or depressed that over 10 years of original site content can be compiled into a static site in under a minute. A full pipeline deployment is not strictly necessary for a simple personal blog. Render.com will build and host it for you in a jiffy without much of this packaging and pipelining of code (there are even render.yaml declarative manifest files you can drop into your repo to automate that process as well). But the moment you are not the sole contributor to a project, things become easier to manage with pipelines that clearly separate CI and CD, with immutable artifacts in between.

And that’s about all I have for this post. Only one real post this year, but I promise I’ve got more to come as I circle back on all I’ve learned in my deep dives into the world of Kubernetes, DevOps, and infrastructure as code over the last few years.