RDBMS vs NoSQL and How the Database World is Changing

Over the past year I am getting asked more and more about NoSQL. How do I feel about it? Have I set it up? Are there benchmarks? Let’s go over some history below and hopefully we can get an idea of where the technology is moving.

This will be at a high level, and I would be more than happy to explain all phases in great detail if you email me. Let’s explain this in three/four phases.

Phase 1:

When computers first took off there was a need for a database right away. At this time, data was incredibly small and computers were in their infancy. Databases were also organized in flat file systems with many variations. I was fortunate to work on VMS RMS databases. Back in those days you would have columns with fill to make out the structure. Below is an example

As you can see we had to account for the “fill” here. So in your DCL or whatever code would have to represent:

First Name 0 -18

Last Name 19 – 41

Emp # 42 – 50

As you can imagine as more tables and data got added the more convoluted it became.

When I first got out of Northwestern, my job was to convert and move data from VMS RMS into an Oracle 8i database. I used a product called Powerhouse QTP to handle this. Lots of companies during this time were starting to see the issues of flat file architecture.

Due to the limitations of flat file systems, developers at IBM Research Lab started to create the relational model with a memory/cache component and a language called SQL to interact with the RDBMS.

Note: keep in mind not everyone converted to RDBMS right away. There were lots of companies that took the wait and see approach. We are seeing this right now with NoSQL/NewSQL architecture. In fact, I was converting RMS data to Oracle in the late 90s.

Phase 2:

Introduction of the relational model!

Edgar Codd noticed there was an issue with flat file architecture early on and decided to create a memory segment with stored data similar to a LIFO/FIFO stack. He figured out that recently used data would probably be called again. Codd also realized that there would need to be a coding language to interact with the database. He came up with structured query language (SQL). There are probably more people than just Codd but he gets the credit for it so bare with me.

Codd also realized that most people would be loading data from traditional flat file systems so he knew he had to make the cost savings on the load and not on the query side. Basically, load the rows sequentially and in a normalized form. Since data was small, a full table scan was not a huge deal. In all fairness, there is no way Codd could have ever imagined a billion row table. Also, NoSQL/NewSQL fans have to remember during this time almost everything was 32-bit architecture. NoSQL/NewSQL concepts do not work or would not work during this time.

In the simplest form a relational model/database is:

- Organized table structures with relationships. Meaning tables have primary and foreign key relationships and are normalized. Normalized for non-data people means to minimize duplicate data with relationships

- Create a memory component trying to limit disk I/O calls.

As RDBMS databases got bigger and bigger, vendors like Oracle, SQL Server and IBM would add stuff to make queries faster. They would alter the optimizer on every version to look at faster paths to data. They would add things like partitioning, materialized views, pinning and bitmap indexes. There are other things but we get the idea. Due to the 4 gig memory limitation with 32-bit architecture, traditional RDBMS databases were starting to feel the heat. Then 64-bit architecture gave RDBMS databases a pass for a while because you no longer had the 4 gig limitation and could store much more data and execution plans in the buffer.

Even with recently used data being stored in the buffer and common SQL plans/paths being stored in memory, RDBMS was not keeping up with the demand. As more and more data was being added and all options like partitioning, correct indexing, IOTs and materialized views were exhausted, we needed to look for other options. Also, downtime is not acceptable today.

Not just query speed but also RDBMS disaster recovery solutions needed work. I have personally worked on Oracle’s DataGuard and have set it up many times. It is a good DR solution but it is not seamless. Even if you set fast-start failover there are some limitations. Oracle RAC is not cross location and is mainly for hardware failover. RDBMS databases are not designed to handle clustering very well and the cost for the RAC solution is out of hand so there are a lot of companies that opted out of it. Even with TAF (transaction application failover) setup you still only get seamless failover on selects. Your DML will rollback.

With all the above limitations, developers started to rethink the model and we entered into the most recent phase.

Phase 3/4:

Introduction of NoSQL and NewSQL! I want to be clear that NoSQL means “Not Only” SQL. Some people get confused by that.

I say phase 3 or phase 4 because some people might want to add OLTP as a phase. To me it can go either way.

As the limitations above start to manifest themselves and production databases can’t have any downtime, developers started to think about how to solve these issues.

One issue that a lot of developers complain about is converting relational data into in-memory to do work. NoSQL allows for you to handle this without the conversion. In the old days this was not a huge deal. Now when we are talking large amounts of data with calculations being executed we can see why this demand introduced itself.

Unlike the old days, developers today in the open source community are saying let’s pass the cost savings on the query and not on the load. NoSQL databases are broken up into key, document, column and graph design. In a column store we have data-organized architecture. Traditional RDBMS fans will say “what about if you have multiple style queries going against the same table?”. In a column store, the other queries will suffer since the table is query ready. This is true, but it has been my experience over the years that the same queries are run over and over again. Ad-hoc queries are very rare and you should not design accordingly.

I get asked which model to use? Key, document, column or graph? The truth is that it depends on your need. SAP HANA which is kind of like a hybrid, decided to go with a column based architecture where calculation views can be created in the modular perspective. SAP HANA also allows for old RDBMS row-based architecture. The point of bringing up SAP HANA is I think we are going to start to see these hybrid databases.

Developers in NoSQL also decided to move to a horizontal scaling approach since they knew that vertical scaling (CPU,RAM, etc) is getting exhausted. They knew that by doing this you would solve two problems. You can span the work load across multiple machines and add larger servers on the fly while accomplishing a true 24/7 environment.

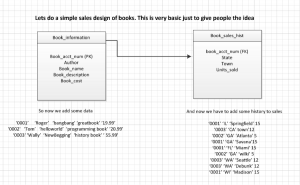

Let me show you an example of a basic Column Store: (The goal here is to give non-technical people an idea)

So in the traditional RDBMS way if I wanted to know how much money we made in “GA” for the “hello world” book, I would have to link the primary key and foreign key with a join on 0002 and then calculate units sold in table B with cost of book in table A. In this case this would be so easy to do since there is little data.

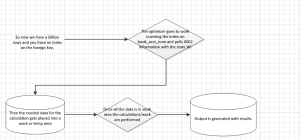

Now lets imagine there are over a billion rows in sales history and we want to know how much account 0002 sold in WI. So now the RDBMS optimizer has to find the path to data and then pull all of these rows into a work area and then do a calculation. Keep in mind this will have cost associated with it. We have to go through and find all the data and then get it ready for the calculations.

Example:

Note: keep in mind RDBMS vendors have added things like parallel query, partitioning, etc. to make this work easier.

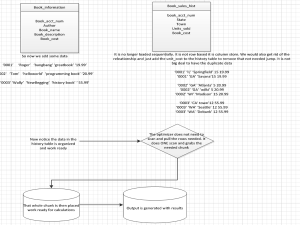

However, anyone who knows architecture can see where this would be over-worked and an issue. NoSQL addresses this problem with basic concepts. Why not have the data organized! If we had to do this same query, it would perform much faster because we would already have the data organized. The data is now column stored.

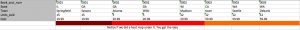

I had a request to show how the column store would look with this table. Since we would do the store of act_num it would look like this. Notice we basically just flipped the column to be data ready. The concept is so basic you wonder why it took so long for it to come into fruition.

We can easily see the benefits here. This is in the basic form so non-technical people can get the idea. There is also benefits on how memory is used and scaling but this is just a simple explanation on how the database world is progressing.

Conclusion:

NoSQL/NewSQL is here to stay. NoSQL/NewSQL handles the application needs better and I have yet to see a developer who has been disappointed by them. The big players are gobbling up the concepts because they also see the benefits which I have also personally seen and experienced. At the very least the query ready architecture is amazing. Space is cheap so who cares if you have duplicate data or spread partitions. The speeds you get with this scaling and architecture are too great to dismiss. The last benefit and most important is that it is Open Source. A whole community, including me, help to solve different issues. You have the best of the best looking into your problem. Some of the best support I have ever gotten was with Horton. It really felt like they cared about my problem.

I am not saying to go ahead and drop your RDBMS database now and convert. I am pretty sure we are going to start to see hybrids like SAP HANA.

Like anything I want you to really think about the benefits and progress accordingly. Do not only go with Oracle because you know it. Do not only go with SQL Server because you know it. Really look at the problem and see what is the best architecture for your application. I promise you happiness in the long run with this approach.

Thanks for reading. Check out SPR’s Data Expertise page to learn more.