Keeping Xunit Tests Clean and DRY Using Theory

“Keep your tests clean. Treat them as first-class citizens of the system.”

~Robert “Uncle Bob” Martin

Nearly every developer understands the importance of keeping the code repo clean. It is in this spirit that I call attention to Theory tests, a feature in Xunit that encourages writing reusable tests while helping you follow the Don’t Repeat Yourself (DRY) principle.

Introduction

Let’s unpack clean code. Clean code is more readable to developers and makes refactoring easier. It also discourages the bad practices that produce buggy code that is difficult to fix. Good practice says to keep production code clean and DRY, and it’s equally important for the test code that accompanies our projects to be clean and DRY as well.

After all, we rely on our test projects to validate new code we write and to protect existing code from bugs introduced by refactoring. We also rely on test projects in our CI/CD pipelines to help keep bad code from being merged.

Fact vs Theory

In an Xunit test class or fixture, there are two kinds of tests: Fact tests and Theory tests. The small, but very important, difference is that Theory tests are parameterized and can take outside input. Fact tests, however, are not parameterized and cannot take outside input.

For Fact tests, you must declare your test input and expected results directly inside the test. This is perfectly fine for most test cases, and the vast majority of your tests will likely be simple enough to express as a Fact. Technically speaking, you could share member or static data between Fact tests in a class, but that wouldn’t be a good idea.

Facts first

Consider a scenario where you want to write a test for a method that accepts a single parameter (e.g., an ID) and returns a given result. You could write an individual Fact test for each input you want to validate. However, that approach isn’t very DRY. Plus, any refactoring of the method would have to be carried over to each of those tests as well.
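
To make the duplication concrete, here is a minimal sketch of the one-Fact-per-input approach, assuming xUnit 2.x. IdValidator and its "an ID is valid when it is positive" rule are hypothetical stand-ins for the single-parameter method described above; the point is that every test repeats the same call and assertion with different literals.

```csharp
using Xunit;

// Hypothetical class under test (not from the article): an ID is valid when it is positive.
public static class IdValidator
{
    public static bool IsValid(int id) => id > 0;
}

// One Fact per input: the bodies are identical apart from the literal values.
public class IdFactTests
{
    [Fact]
    public void IsValid_One_ReturnsTrue()
    {
        Assert.True(IdValidator.IsValid(1));
    }

    [Fact]
    public void IsValid_Zero_ReturnsFalse()
    {
        Assert.False(IdValidator.IsValid(0));
    }

    [Fact]
    public void IsValid_Negative_ReturnsFalse()
    {
        Assert.False(IdValidator.IsValid(-5));
    }
}
```

If IsValid were renamed or gained a second parameter, all three methods would need the same edit.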

You could instead write your test logic inside a loop and iterate over the various inputs. This works fine while all tests are passing. But if a particular input causes either a failed assertion or an exception, test execution stops and the remaining inputs are never run. To complicate things further, unless we write additional code to log or output which input we are testing, we will not know what the offending input is. This can also make it frustrating to debug a test that fails in a CI/CD pipeline.
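
The loop-based alternative might look like the following sketch, assuming xUnit 2.x and the same hypothetical IdValidator. The ID 0 is included deliberately to play the part of the offending input.

```csharp
using Xunit;

// Hypothetical class under test (not from the article): an ID is valid when it is positive.
public static class IdValidator
{
    public static bool IsValid(int id) => id > 0;
}

public class IdLoopTests
{
    // Anti-pattern: a single Fact that loops over many inputs.
    [Fact]
    public void IsValid_AcceptsAllIds()
    {
        // 0 is the deliberately "bad" input for this illustration.
        int[] ids = { 1, 42, 0, 7 };

        foreach (var id in ids)
        {
            // When id is 0, Assert.True throws: 7 is never checked, and the
            // failure message does not say which id was being tested.
            Assert.True(IdValidator.IsValid(id));
        }
    }
}
```

Running this Fact produces a single failure from Assert.True, with nothing in the message pointing at 0 as the culprit.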

A helpful Theory

If we refactor our tests to use parameterized Theory tests in Xunit, we can write DRY tests that take multiple inputs, without the drawbacks and headaches that Fact tests would typically present. A Theory test looks similar to what we would write for a Fact test. The difference is that we add an input parameter (the input for the logic we are testing) and an expectation parameter that passes in the expected result for our assertion. We supply test data and expectations to our Theory tests using the InlineData, MemberData, or ClassData decorators, which are found in the Xunit namespace. Which decorator to use depends on how much data you have and whether you want to reuse that data in other tests.

No matter which type we use, all theory tests are declared in the same way. Consider the following test declaration for our method TestSum:

```csharp
[Theory]
[InlineData(1, 2, 3)]
public void TestSum(int a, int b, int expectedResult)
{
    Assert.Equal(expectedResult, a + b);
}
```

Here we declare a test method with three parameters and mark it with the [Theory] decorator. Parameters a and b define the data we pass in to our test, and expectedResult is the value our assertion checks at the end of the test. We then choose which data decorator to use (InlineData in this case) and supply a matching value for each parameter. A test can have multiple data decorators, and they don’t all need to be the same type. For example, the following is a perfectly valid declaration:

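```csharp
[Theory]
[InlineData(1, 2, 3, 6)]
[InlineData(1, 1, 1, 3)]
[MemberData(nameof(MyDataPropName))] // a public static member returning IEnumerable<object[]>
public void TestSum(int a, int b, int c, int sumTotalResult)
{
    Assert.Equal(sumTotalResult, a + b + c);
}
```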

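The main constraint here is that each data item must supply exactly as many values as the test method has parameters, and each value must be compatible with its parameter’s type. For example, the following test will fail every time it is run, because its data item supplies three values for four parameters: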

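```csharp
[Theory]
[InlineData(1, 2, 3)] // only three values...
public void TestSum(int a, int b, int c, int expectedResult) // ...for four parameters
{
    Assert.Equal(expectedResult, a + b + c);
}
```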

Data Types

At its core, Theory data is a collection of object[] rows: in xUnit 2.x, every data decorator ultimately yields an IEnumerable<object[]>, where each object[] holds the set of values for one run of the test’s parameters. We define our data using the following types:

- InlineData(params object[] data): Defines test arguments directly on the test method, as the arguments to the decorator’s constructor.

- MemberData(string memberName, params object[] parameters): Used when we want to define our test data separately from the test, as a public static property, field, or method that returns IEnumerable<object[]>. If the member is a method, we can supply that method’s arguments (if any) here as well. We can also tell Xunit where to find the member, through the MemberType property, if it’s not declared in the same class as the test. Examples of usage:

  - [MemberData(nameof(MyDataProp))]

  - [MemberData(nameof(MyDataMethod), parameters: new object[] {1,2})] (where MyDataMethod has the signature MyDataMethod(int a, int b))

  - [MemberData(nameof(Foo.BarClassType.MyOtherDataProp), MemberType = typeof(Foo.BarClassType))]

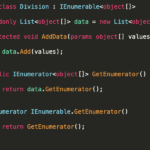

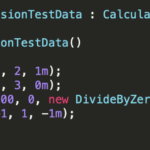

- ClassData(Type type): Tells Xunit to create an instance of the class passed in the type parameter and use it as the data source. The class must implement IEnumerable<object[]> and have a parameterless constructor. One way to structure this is a reusable base class that implements IEnumerable<object[]>, plus a derived class that creates the test data in its constructor.
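
Here is a minimal sketch of that ClassData pattern, assuming xUnit 2.x. The names TestDataCollection, AddRow, and SumTestData are hypothetical: the base class stores rows and exposes them as IEnumerable<object[]>, and the derived class adds its rows in the constructor that Xunit calls.

```csharp
using System.Collections;
using System.Collections.Generic;
using Xunit;

// Hypothetical reusable base class: stores rows and exposes them as
// IEnumerable<object[]>, which is what [ClassData] requires.
public abstract class TestDataCollection : IEnumerable<object[]>
{
    private readonly List<object[]> _rows = new List<object[]>();

    // Derived classes call this from their constructors to register one row.
    protected void AddRow(params object[] values) => _rows.Add(values);

    public IEnumerator<object[]> GetEnumerator() => _rows.GetEnumerator();

    IEnumerator IEnumerable.GetEnumerator() => GetEnumerator();
}

// Concrete data class: Xunit creates it through its parameterless constructor.
public class SumTestData : TestDataCollection
{
    public SumTestData()
    {
        AddRow(1, 2, 3, 6);
        AddRow(1, 1, 1, 3);
        AddRow(-1, 0, 1, 0);
    }
}

public class SumTests
{
    [Theory]
    [ClassData(typeof(SumTestData))]
    public void TestSum(int a, int b, int c, int sumTotalResult)
    {
        Assert.Equal(sumTotalResult, a + b + c);
    }
}
```

Xunit also ships generic TheoryData classes that play a similar role to the hypothetical base class here; the hand-written version just makes the mechanics visible.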

InlineData types are best used to easily define edge cases to test against. They are not as good for testing large data sets.
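
For example, a handful of InlineData rows can pin down the boundaries of a simple addition method. This is a sketch assuming xUnit 2.x; Calculator.Sum is hypothetical, and the specific edge cases are illustrative choices rather than ones taken from the article.

```csharp
using Xunit;

// Hypothetical class under test (not from the article).
public static class Calculator
{
    public static int Sum(int a, int b) => a + b;
}

public class SumEdgeCaseTests
{
    // Each row is one edge case that would otherwise need its own Fact.
    [Theory]
    [InlineData(0, 0, 0)]                        // zero inputs
    [InlineData(-1, 1, 0)]                       // values that cancel out
    [InlineData(-5, -7, -12)]                    // both negative
    [InlineData(int.MaxValue, 0, int.MaxValue)]  // upper bound, no overflow
    public void Sum_EdgeCases(int a, int b, int expectedResult)
    {
        Assert.Equal(expectedResult, Calculator.Sum(a, b));
    }
}
```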

MemberData types are better suited for large data sets. I personally prefer using MemberData when writing my Theory tests.
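
As a sketch of why MemberData scales to larger data sets, the example below (assuming xUnit 2.x) combines three MemberData sources on one Theory: a small property in the test class, a generated set of 100 rows in another class, and a data method that takes arguments from the decorator. SumData, GeneratedPairs, PairsUpTo, and SmallPairs are hypothetical names; SumData plays the role of the article’s Foo.BarClassType.

```csharp
using System.Collections.Generic;
using System.Linq;
using Xunit;

// Hypothetical shared data holder in a separate class.
public static class SumData
{
    // Generated rows: 100 pairs (i, i) with expected sum 2 * i.
    public static IEnumerable<object[]> GeneratedPairs =>
        Enumerable.Range(1, 100).Select(i => new object[] { i, i, 2 * i });

    // A data method whose arguments are supplied by the MemberData decorator.
    public static IEnumerable<object[]> PairsUpTo(int count, int offset) =>
        Enumerable.Range(1, count).Select(i => new object[] { i, offset, i + offset });
}

public class SumMemberDataTests
{
    // Data member declared in the test class itself; it must be public static.
    public static IEnumerable<object[]> SmallPairs => new List<object[]>
    {
        new object[] { 1, 2, 3 },
        new object[] { 10, -4, 6 },
    };

    [Theory]
    [MemberData(nameof(SmallPairs))]
    [MemberData(nameof(SumData.GeneratedPairs), MemberType = typeof(SumData))]
    [MemberData(nameof(SumData.PairsUpTo), 5, 10, MemberType = typeof(SumData))]
    public void TestSum(int a, int b, int expectedResult)
    {
        Assert.Equal(expectedResult, a + b);
    }
}
```

Growing the data set is then a matter of changing the generator, not adding decorators.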

ClassData types offer the most portability. This is useful if we want to be able to reuse the same test data across multiple test methods and test classes. There is one drawback, as compared to InlineData and MemberData types, when we run tests inside of Visual Studio. More about that later.
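
To illustrate that portability, here is a sketch in which one hypothetical ClassData source, CommonSumCases, feeds two different test classes. It implements IEnumerable<object[]> directly with yield return, which is the minimum Xunit requires of a ClassData type (assuming xUnit 2.x). The class and method names are illustrative only.

```csharp
using System.Collections;
using System.Collections.Generic;
using Xunit;

// Hypothetical data class shared by multiple test classes.
public class CommonSumCases : IEnumerable<object[]>
{
    public IEnumerator<object[]> GetEnumerator()
    {
        yield return new object[] { 1, 2, 3 };
        yield return new object[] { 0, 0, 0 };
        yield return new object[] { -3, 3, 0 };
    }

    IEnumerator IEnumerable.GetEnumerator() => GetEnumerator();
}

public class IntSumTests
{
    [Theory]
    [ClassData(typeof(CommonSumCases))]
    public void Sum_Int(int a, int b, int expected)
    {
        Assert.Equal(expected, a + b);
    }
}

public class CommutativeSumTests
{
    // Same rows, reused by a different test class and method.
    [Theory]
    [ClassData(typeof(CommonSumCases))]
    public void Sum_IsCommutative(int a, int b, int expected)
    {
        Assert.Equal(expected, b + a);
    }
}
```

Adding a row to CommonSumCases extends both test classes at once.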

Test Representation

With Theory tests, Visual Studio creates an individual result entry for each Data item, complete with the parameter values used for the test. Let’s take another example of a multiple InlineData test:

```csharp
[Theory]
[InlineData(1, 2, 3, 6)]
[InlineData(1, 1, 1, 3)]
public void TestSum(int a, int b, int c, int sumTotalResult)
{
    Assert.Equal(sumTotalResult, a + b + c);
}
```

When this test is run inside Visual Studio, we get the following Pass/Fail results (assuming our test class is named “SumTests” and lives in the “MyTests” namespace):

```
MyTests.SumTests.TestSum(a: 1, b: 2, c: 3, sumTotalResult: 6)
MyTests.SumTests.TestSum(a: 1, b: 1, c: 1, sumTotalResult: 3)
```

Here we can easily see the values used for each test, whether it passed or failed. A failure in one data item also won’t stop the other data items of the same test method from running. As of this writing, Visual Studio does not create separate test entries for Theory tests that use ClassData.

Closing remarks on Theory tests

Theory tests are a great way to test a piece of logic against a large data set. They also provide an easy mechanism for declaring and reusing test data, and they help keep your tests clean and DRY. I highly recommend trying them out in your next Xunit test project. Happy Testing!